Artificial intelligence has been evolving rapidly, with breakthroughs in natural language processing, computer vision, and generative models. One of the most notable recent advances is Stable Diffusion, which has shown remarkable capabilities in generating realistic images. ControlNet builds on Stable Diffusion by letting it follow extra input conditions. Compared to traditional approaches, ControlNet offers improved results and requires considerably less training time. In this article, we'll take a deep dive into what ControlNet is, how it works, and why it matters.

Stable Diffusion Models and Their Limitations

Stable Diffusion models have proven highly effective at generating realistic images from textual prompts. However, when it comes to specific subtasks or images with precise compositional elements, these models may fall short. The key challenge lies in training the model to generate images that adhere to additional conditions, such as following a depth map. By enhancing Stable Diffusion models with additional input conditions, researchers can achieve more precise and controllable results.

Introducing ControlNet

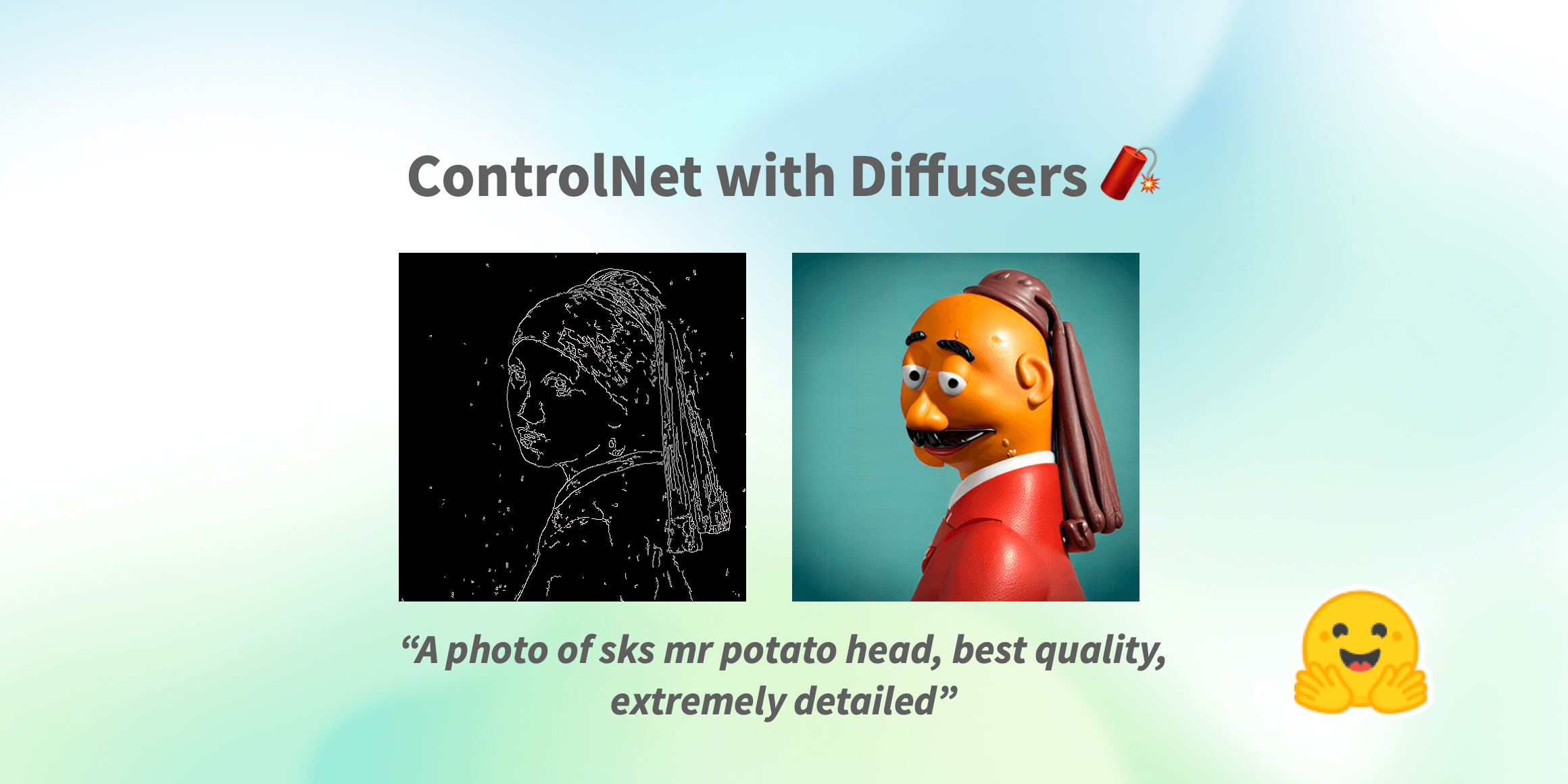

ControlNet, developed by Lvmin Zhang, the creator of Style2Paints, is a groundbreaking approach to AI image generation that generalizes the idea of "whatever-to-image". Unlike conventional text-to-image or image-to-image models, ControlNet is designed to improve user workflows by giving users greater control over the generated images.

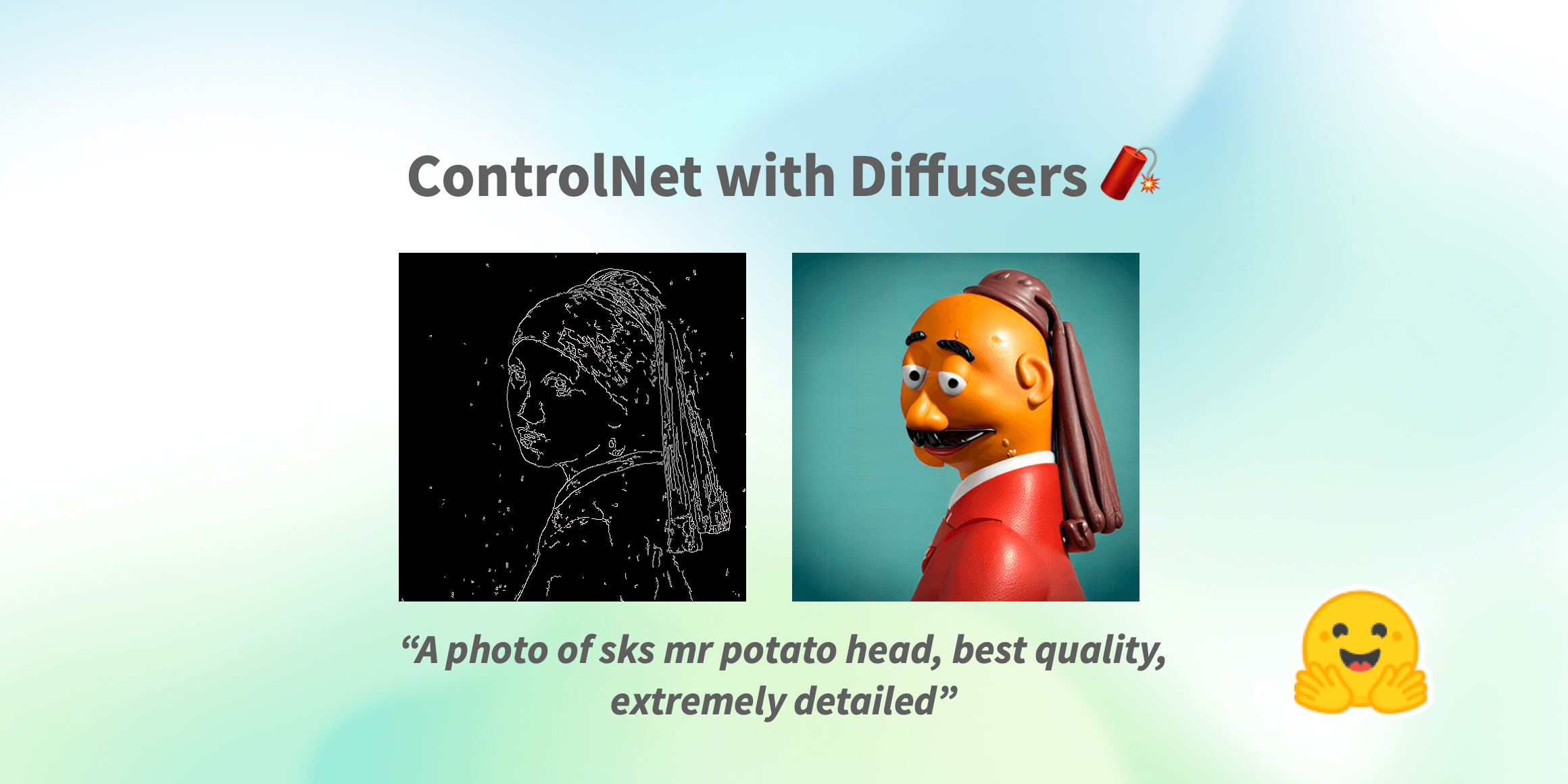

ControlNet Can Take This Inside Stable Diffusion:

Extract This:

Now You Can Do This:

Applications of ControlNet: From Human Pose-to-Image to Line Art Colorization

ControlNet's wide-ranging applications make it a valuable tool for various industries. Some examples include:

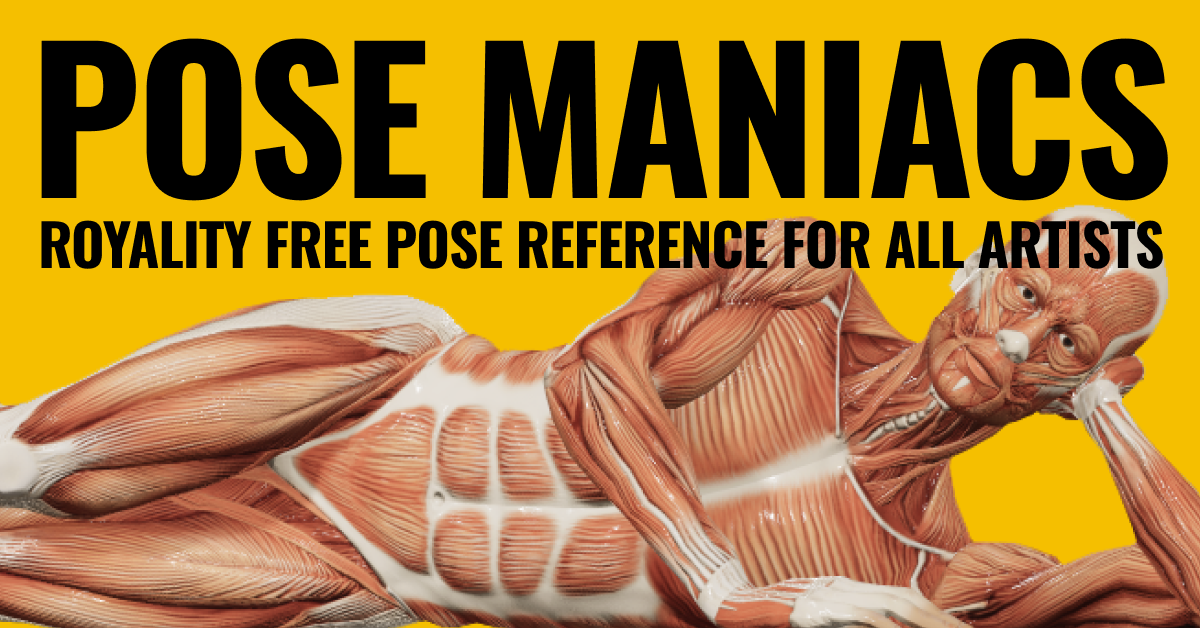

- Human Pose-to-Image: ControlNet generates clean, anatomically accurate images based on human poses. It even works well with poses where limbs are folded or not visible, ensuring faithful representation of the input poses.

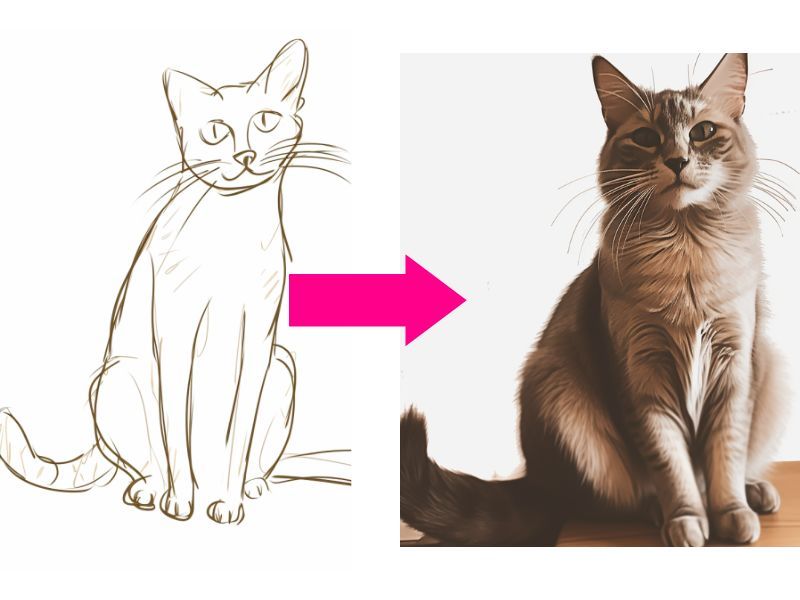

- Scribble-to-Image (my favourite): ControlNet extracts the essential features of an initial sketch and generates detailed, high-resolution images that follow it closely. It can also turn an actual photo into a scribble and then generate images from that.

- Normal Map-to-Image: This application allows users to focus on the subject's coherency instead of the surroundings and depth, enabling more direct edits to the subject and background.

- Recoloring and Stylizing: Transforming images into realistic statues and paintings.

- Style Transfer: Transferring the style and pose of one image accurately to another.

- Line Art Colorization: ControlNet's accurate preservation of line art details makes it a promising tool for colorizing black and white artwork. While this application is not yet released, it is expected to be available soon, addressing both technical and ethical concerns.

- 3D design and Re-skinning:

Overcoming Text-to-Image Limitations

One of the main limitations of text-to-image models is the difficulty in expressing certain ideas efficiently in text. ControlNet, however, overcomes this limitation by offering better control over image generation and conditioning. This improved control saves users time and effort when trying to express complex ideas through text.

ControlNet works by copying the weights of the neural network blocks into a locked copy and a trainable copy. While the locked copy preserves the model, the trainable copy learns the input conditions. This unique approach enables ControlNet to generate images with the quality of the original model without destroying the production-ready diffusion models.
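The locked/trainable split can be illustrated with a minimal sketch. It is not the ControlNet implementation: the block is a toy dictionary of floats rather than real tensors, and `make_controlnet_copies`, `sgd_step`, and the gradient values are hypothetical names and numbers chosen only for illustration.

```python
import copy

# Toy stand-in for one pretrained network block: a dict of named weights.
# Real Stable Diffusion blocks hold tensors; plain floats keep this runnable.
pretrained_block = {"w": [0.5, -1.2, 0.8], "b": 0.1}


def make_controlnet_copies(block):
    """Return (locked, trainable) copies of a block's weights (hypothetical helper)."""
    locked = copy.deepcopy(block)     # preserves the original model
    trainable = copy.deepcopy(block)  # will learn the input condition
    return locked, trainable


def sgd_step(params, grads, lr=0.1):
    """Apply one gradient step in place. Only ever called on the trainable copy."""
    params["w"] = [w - lr * g for w, g in zip(params["w"], grads["w"])]
    params["b"] = params["b"] - lr * grads["b"]


locked, trainable = make_controlnet_copies(pretrained_block)

# Made-up gradients standing in for a loss on the conditioning task.
fake_grads = {"w": [1.0, 1.0, 1.0], "b": 1.0}
sgd_step(trainable, fake_grads)

print(locked == pretrained_block)     # True: the locked copy is untouched
print(trainable == pretrained_block)  # False: the trainable copy has moved
```

Because both copies start from the same pretrained weights, the trainable copy begins from a model that already works rather than from random initialization.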

ControlNets were proposed as a solution to train Stable Diffusion Models for specialized subtasks. Instead of training a single model, researchers devised a model training mechanism that leverages an external network to handle additional input conditions. This external network, also referred to as the "ControlNet," is responsible for processing depth maps or other conditioning input and integrating it with the main model.

The ControlNet Architecture

ControlNet combines the Stable Diffusion model with an external network to create a new, enhanced model. The external network is responsible for processing the additional conditioning input, while the main model remains unchanged. The two work together, with the external network pushing information into the Stable Diffusion model so that the main model can take advantage of the new information.

The Technical Details of ControlNet

A single forward pass of a ControlNet model involves passing the input image, the prompt, and the extra conditioning information to both the external and frozen models. The information flows through both models simultaneously, with the external network providing additional information to the main model at specific points during the process. This enables the main model to utilize the extra conditioning information for improved performance on specialized subtasks.
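As a rough sketch of this forward pass, the toy code below runs a frozen branch and an external branch side by side and adds the external branch's output into the main branch after every block. The scalar "blocks", the function names, and the choice to feed the condition only into the external branch are assumptions made for illustration; the real model operates on image latents and text embeddings.

```python
def frozen_block(x, weight):
    """One block of the locked main model (toy: scale plus a fixed offset)."""
    return weight * x + 1.0


def control_block(x, condition, weight):
    """One block of the external network; it also sees the conditioning input."""
    return weight * (x + condition)


def forward(x, condition, frozen_weights, control_weights):
    """Run both networks in parallel, injecting control outputs after each block."""
    h_main = x
    h_ctrl = x
    for w_f, w_c in zip(frozen_weights, control_weights):
        h_ctrl = control_block(h_ctrl, condition, w_c)
        # Injection point: the external output is added to the main hidden state.
        h_main = frozen_block(h_main, w_f) + h_ctrl
    return h_main


frozen_weights = [1.0, 2.0]

# With all-zero external weights the result is exactly the frozen model's output.
no_control = forward(2.0, 5.0, frozen_weights, [0.0, 0.0])
with_control = forward(2.0, 5.0, frozen_weights, [0.5, 0.5])

print(no_control)    # 7.0
print(with_control)  # 18.25
```

The first call shows why this design is safe for the main model: when the external branch contributes nothing, the frozen model behaves exactly as it did before.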

How ControlNet Works

The ControlNet method involves several key steps:

a. Lock the original model: Instead of updating weights inside the main AI model, ControlNet locks the model, keeping its internal weights unchanged.

b. Create an external network: A separate external network is designed to understand and process the new inputs required for the subtask.

c. Train the external network: Using a stable diffusion training routine, the external network is trained with image-caption pairs and depth maps generated for each iteration.

d. Integrate the external and main models: The trained external network is then used to pass data into the main model, which ultimately improves the performance on the subtask.
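The four steps above can be sketched as a toy training loop. This is a sketch under strong simplifications, not the actual training routine: each model is a single scalar weight, the `(x, cond, target)` tuples stand in for image-caption pairs with their depth maps, and all names and numbers are hypothetical.

```python
def main_model(x, w_frozen):
    """Step a: the locked main model. Its weight is never updated below."""
    return w_frozen * x


def external_net(cond, w_ext):
    """Step b: a separate external network that processes the new input."""
    return w_ext * cond


def train_external(data, w_frozen, w_ext=0.0, lr=0.05, epochs=200):
    """Step c: fit only the external weight with plain gradient descent."""
    for _ in range(epochs):
        for x, cond, target in data:
            # Step d: the external output is combined with the main model's output.
            pred = main_model(x, w_frozen) + external_net(cond, w_ext)
            error = pred - target
            # Gradient of 0.5 * error**2 with respect to w_ext only.
            w_ext -= lr * error * cond
    return w_ext


W_FROZEN = 2.0
# Synthetic data generated as target = 2.0 * x + 3.0 * cond.
data = [(1.0, 1.0, 5.0), (2.0, 0.5, 5.5), (0.5, 2.0, 7.0)]

w_ext = train_external(data, W_FROZEN)
print(round(w_ext, 3))  # 3.0
print(W_FROZEN)         # 2.0
```

The external weight recovers the part of the target that depends on the condition, while the main model's weight is the same before and after training.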

The Benefits of ControlNet

ControlNet has gained popularity for several reasons:

a. Improved Results: Comparisons between ControlNet and other methods, such as Depth-to-Image, show that ControlNet produces higher-quality outputs with better fine-grained control.

b. Increased Efficiency: One of the most significant advantages of ControlNet is its efficiency. Training a ControlNet model requires an order of magnitude less time than the traditional approach. For instance, ControlNet can be trained in less than a week on a consumer-grade GPU, while other methods may take up to 2,000 GPU hours.

c. Preservation of Production-Ready Quality: By locking the main model and training only the external network, ControlNet aims to prevent overfitting and preserve the production-ready quality of large AI models.
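To put the efficiency figures quoted above on a common scale, here is a quick back-of-the-envelope calculation. It assumes a single GPU running continuously for a full week as an upper bound on "less than a week", and it treats consumer and datacenter GPU hours as equal, which they are not, so the ratio is only indicative.

```python
HOURS_PER_WEEK = 7 * 24

# Assumption: one GPU, running non-stop for a full week (an upper bound).
controlnet_gpu_hours = HOURS_PER_WEEK * 1
baseline_gpu_hours = 2000  # figure quoted in the text for other methods

ratio = baseline_gpu_hours / controlnet_gpu_hours

print(controlnet_gpu_hours)  # 168
print(round(ratio, 1))       # 11.9
```

Under these assumptions the gap is roughly a factor of twelve, which is consistent with the "order of magnitude" claim.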

Comparing ControlNet Approach to Other Methods

The ControlNet method offers an alternative approach to the standard paradigm of fine-tuning existing weights or training additional weights for specialized subtasks. For example, in the case of the Stable Diffusion Depth model, researchers trained the model using an extra channel for the depth map instead of freezing the model and using an external network. Comparing the two methods, the ControlNet approach shows potential for better performance and adaptability to various subtasks.

Enhanced Control for Higher Quality Image Generation

ControlNet's ability to handle higher-resolution input maps allows it to generate better images. For example, while the depth-to-image model of Stable Diffusion 2.1 only takes in a 64x64 depth map, ControlNet can work with a 512x512 depth map. This enhanced control results in more accurate image generations, as the diffusion model can now follow the depth map more closely.
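The difference between a 64x64 and a 512x512 depth map can be made concrete with a small sketch. The synthetic depth map and the block-averaging `downsample` helper are assumptions for illustration; how a real pipeline reduces the depth map may differ.

```python
def downsample(depth_map, factor):
    """Block-average a square 2D list by an integer factor (hypothetical helper)."""
    size = len(depth_map) // factor
    out = []
    for r in range(size):
        row = []
        for c in range(size):
            block = [
                depth_map[r * factor + i][c * factor + j]
                for i in range(factor)
                for j in range(factor)
            ]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


SIZE = 512
# Synthetic depth map: flat background (0.0) with a thin 4-pixel-wide bar (1.0).
full = [[1.0 if 256 <= c < 260 else 0.0 for c in range(SIZE)] for _ in range(SIZE)]

low = downsample(full, 8)  # 512x512 -> 64x64

print(len(full) * len(full[0]))  # 262144 depth values
print(len(low) * len(low[0]))    # 4096 depth values
print(low[0][32])                # 0.5: the thin bar is smeared to half strength
```

The full-resolution map carries 64 times as many depth values, and the thin structure that is crisp at 512x512 becomes a blurred half-strength column at 64x64.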

Impact on the Industry: A New Era of AI Image Generation

ControlNet's potential impact on the AI image generation industry is immense. Its generalizability and ability to handle various input conditions could revolutionize how major companies train and control their large diffusion models. The open-source nature of ControlNet has already garnered significant attention, with its GitHub page receiving 300 stars in just 24 hours, and 14.5K to date, without any promotion.

Real-World Applications: From Artistic Usage to Image Restoration

ControlNet's realistic capabilities open up new possibilities in artistic usage, architectural rendering, design brainstorming, storyboarding, and more. It also holds promise for highly accurate black-and-white image colorization and image restoration, thanks to its advanced control mechanisms.

Unanswered Questions

While ControlNet has shown promising results, several questions remain unanswered, such as why it is faster and why the images it produces are better than those from other methods. Researchers speculate that the locked main model provides a stable baseline that enables faster and more efficient fine-tuning, but further investigation is needed to confirm these theories.

Conclusion

The ControlNet method presents a promising way to enhance Stable Diffusion models for specialized subtasks without sacrificing the wealth of knowledge already embedded in the model. By leveraging an external network to process additional input conditions and integrating it with the main model, researchers have opened up new possibilities for AI applications. While further study and experimentation are needed to fully understand the potential of ControlNet, its introduction marks an exciting development in the field of artificial intelligence. There are already several add-ons for ControlNet, such as style transfer. We will look into these soon.
