The recent explosion of AI-generated art owes much to a single open-source technology: Stability AI's Stable Diffusion image generation model. This system powers many of the top apps that create images from text prompts, including Lensa, DreamStudio, Playground AI, and others. But how do these apps produce such images?

While each app applies Stable Diffusion uniquely, they all build on the core capabilities of this remarkably versatile generative model. In just months, Stable Diffusion has unlocked tremendous creative potential for artists and developers alike.

What is Stable Diffusion

At its core, Stable Diffusion is an image diffusion model. It uses a technique called latent diffusion to synthesize images directly from textual descriptions. Rather than working on full-resolution pixels, it works in a compact latent space: generation starts from random noise in that space, and the model iteratively removes noise until the result, once decoded, matches the prompt.

The results are extraordinary - with only a few words, Stable Diffusion conjures everything from intricate portraits to surreal fantasy landscapes. Yet as with any transformative technology, we must thoughtfully analyze its far-reaching implications.

As AI image synthesis continues rapidly evolving, Stable Diffusion marks a major milestone. Its open-source nature, active community, and remarkable outputs have made it an engine of innovation. But responsible guidance is still needed to steer these models toward creative enrichment rather than misuse. If harnessed carefully, generative AI promises to open new frontiers of human expression.

Understanding the Technology Behind Stable Diffusion

Stable Diffusion is a remarkable tool in the AI sphere that has revolutionized image generators. Emerging from the realm of Deep Learning in 2022, it leverages a text-to-image model, transforming textual descriptions into distinct images. At the heart of this technology lies the latent diffusion model, the framework that powers Stable Diffusion.

- A latent diffusion model runs the diffusion process in a latent space, gaining the efficiency of a low-dimensional data representation.

- These latent spaces and diffusion models, with their noise management processes, serve as the basis for generating the image linked with the text.

Delving into Latent Space

Latent space, in essence, denotes a compressed representation of data. Dimensionality reduction techniques encode data in a more compact form by distilling key information into fewer dimensions. This condensed representation strips away nonessential details from the full, original encoding. The crux of the matter is preserving the most relevant information while still reducing the number of dimensions.

- A 20-dimensional vector, for instance, can be represented using a 10-dimensional vector, leading to a reduction in dimensionality.

- Latent spaces work effectively with diffusion models because they facilitate the isolation of crucial attributes from a vast dataset of detailed images.

- Convolutional neural networks serve as the tools to extricate these crucial attributes.
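The 20-to-10 reduction mentioned above can be illustrated with a deliberately crude sketch. The `encode` and `decode` functions here are hypothetical stand-ins (simple pair averaging), not the learned convolutional encoder that Stable Diffusion uses; the point is only that a shorter code can be expanded back into an approximation of the input, with some detail lost.

```python
import random

def encode(vector):
    """Compress by averaging each adjacent pair of values (halves the length)."""
    return [(vector[i] + vector[i + 1]) / 2 for i in range(0, len(vector), 2)]

def decode(latent):
    """Approximate reconstruction: repeat each latent value twice."""
    return [value for value in latent for _ in range(2)]

rng = random.Random(0)
original = [rng.random() for _ in range(20)]   # 20-dimensional input
latent = encode(original)                      # 10-dimensional latent code
restored = decode(latent)                      # back to 20 dimensions, with some loss

error = max(abs(a - b) for a, b in zip(original, restored))
print(len(original), len(latent), len(restored))
print(f"largest reconstruction error: {error:.3f}")
```

A learned encoder chooses what to keep far more cleverly than pair averaging does, but the trade-off is the same: fewer numbers to process in exchange for an imperfect reconstruction.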

How Diffusion Models Work

Diffusion models, essentially generative models, are trained to create data that mirror the data they've been trained on. The core functioning of these models revolves around adding Gaussian noise to the training data and learning how to restore the original data by removing that noise.

- Forward diffusion denotes the process of increasing noise addition, leading to a point where the entire image morphs into noise.

- Backward diffusion refers to the reversal of the forward diffusion process, iteratively eliminating noise until the image is devoid of it.

- For noise estimation and elimination, the U-Net convolutional neural network is generally employed.

Forward Diffusion Process: Transforming Images to Noise

The forward diffusion process in Stable Diffusion is a key stage that plays a vital role in generating new data. Here are the details:

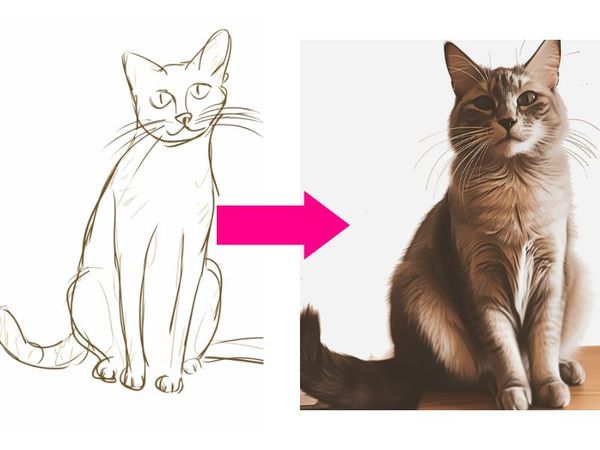

- Adding Noise: The process initiates by gradually adding noise to the training image, which progressively distorts the original data. For instance, a clear image of a cat or dog is slowly turned into a picture that is no longer distinguishable, resulting in a seemingly random noise image.

- Indistinguishable Origin: As the noise increases, it becomes impossible to discern the initial image's content. This process is analogous to a drop of ink dispersing in a glass of water. Over time, the ink diffuses until it is uniformly distributed throughout the water, making it impossible to identify where it was initially dropped.

- Significance: The forward diffusion process is crucial as it prepares the stage for the subsequent reverse diffusion process. By transforming the image into indistinguishable noise, it allows the model to rebuild the image from scratch, guided by the text prompt.

Figure: a cat image progressively transformed into a random noise image by forward diffusion.
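The forward process described above can be sketched in a few lines. This is a minimal illustration, assuming the common formulation in which a noisy sample is a weighted blend of the image and Gaussian noise; the `add_noise` function, the `alpha_bar` parameter name, and the four-pixel "image" are all hypothetical and chosen for illustration.

```python
import math
import random

def add_noise(pixels, alpha_bar, rng):
    """Blend an image with Gaussian noise.

    alpha_bar = 1.0 keeps the image intact; alpha_bar = 0.0 leaves only noise.
    """
    signal_scale = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [signal_scale * p + noise_scale * n for p, n in zip(pixels, noise)]
    return noisy, noise

rng = random.Random(0)
image = [1.0, -1.0, 0.5, -0.5]  # hypothetical 4-pixel "image" scaled to [-1, 1]

# Lower alpha_bar means less of the original image survives.
for alpha_bar in (1.0, 0.9, 0.5, 0.1, 0.0):
    noisy, _ = add_noise(image, alpha_bar, rng)
    print(alpha_bar, [round(v, 2) for v in noisy])
```

As `alpha_bar` falls toward zero, the printed values stop resembling the input, which is the ink-in-water effect in numerical form.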

Reverse Diffusion: Retracing Steps to Generate Meaningful Images

The thrilling aspect of Stable Diffusion lies in its ability to reverse the forward diffusion process. This is often called the denoising step. Here's what it entails:

- Rewinding Time: Imagine playing a video of the ink drop in water in reverse. As time rewinds, the diffused ink starts to concentrate back to its initial position. Similarly, in the context of Stable Diffusion, the model works backwards from the noisy, meaningless image to arrive at a meaningful image - a cat or a dog.

- The Main Idea: The main idea of the reverse diffusion process is to rebuild an image starting from a noisy state, shaped by the specific text prompt. Even though the forward process turns any cat or dog image into indistinguishable noise, the reverse process can produce a coherent cat or dog image, guided by the text prompt.

- Two Parts of Diffusion: Each diffusion process consists of two parts: drift and random motion. In reverse diffusion, the drift is towards either cat or dog images, based on the input text. The model ensures that the result is a distinct and coherent image, not an ambiguous combination of multiple entities.

Hence, the reverse diffusion process is the creative genius behind Stable Diffusion's ability to generate images from text.

Training a Diffusion Model: Learning to Predict the Noise

The ingenious concept of reverse diffusion might prompt the question, "How is this possible?" The answer to this lies in training a neural network to predict the noise added to an image - a crucial component of Stable Diffusion known as the noise predictor, typically implemented as a U-Net model. The process of training this noise predictor unfolds as follows:

- Select a Training Image: Start by choosing a training image, which could be, for instance, a photograph of a cat.

- Generate Random Noise: Next, create a random noise image. This step involves the creation of random patterns that don't bear any resemblance to the original image.

- Corrupt the Training Image: Now, the training image is corrupted by adding the generated noise. This is done incrementally up to a certain number of steps, progressively distorting the original image into a state of noise.

- Train the Noise Predictor: The objective here is to enable the noise predictor to estimate the noise that was added to the original image. The predictor sees only the noisy image, makes its estimate, and is then shown the correct answer (the noise that was actually added); its weights are adjusted to reduce the gap between the two.

After the training phase, the noise predictor becomes adept at estimating the noise added to an image, paving the way for the reverse diffusion process. It's now equipped to work back from a noisy image, gradually eliminating the noise until a clean image emerges.
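The training steps above can be mimicked with a toy example. This is only a sketch under strong assumptions: the "images" are single numbers, the noise level is fixed, and the noise predictor is a two-parameter linear function (`w`, `b`) trained by plain gradient descent rather than a U-Net. All names are hypothetical.

```python
import math
import random

rng = random.Random(0)
ALPHA_BAR = 0.5                      # assumed fixed noise level
training_pixels = [-1.0, 1.0]        # hypothetical one-pixel "images"

def make_example():
    """Pick a training image, draw noise, and corrupt the image with it."""
    x0 = rng.choice(training_pixels)
    noise = rng.gauss(0.0, 1.0)
    noisy = math.sqrt(ALPHA_BAR) * x0 + math.sqrt(1.0 - ALPHA_BAR) * noise
    return noisy, noise

def mean_squared_error(w, b, examples):
    """Average squared gap between predicted and true noise."""
    return sum((w * x + b - n) ** 2 for x, n in examples) / len(examples)

w, b = 0.0, 0.0                      # parameters of the toy noise predictor
held_out = [make_example() for _ in range(2000)]
loss_before = mean_squared_error(w, b, held_out)

learning_rate = 0.01
for _ in range(5000):
    noisy, noise = make_example()
    error = (w * noisy + b) - noise  # prediction minus the correct answer
    w -= learning_rate * 2 * error * noisy   # gradient step on squared error
    b -= learning_rate * 2 * error

loss_after = mean_squared_error(w, b, held_out)
print(f"loss before: {loss_before:.3f}, after: {loss_after:.3f}")
```

The predictor only ever receives the noisy value; the true noise is used solely as the training target, which mirrors the "provide the correct answer" step in the list.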

Reverse Diffusion: Decoding the Noise

Having trained the noise predictor, it's now time to see it in action, embarking on the journey of reverse diffusion. This process operates by gradually eliminating the noise from an image, thereby restoring its original form. Here is how the reverse diffusion process works:

- Generating Random Image: We begin by generating a completely random image that doesn't have any discernible features. This image is at a state where the noise has completely masked any identifiable aspects.

- Estimating Noise: The generated image is then presented to the trained noise predictor, which estimates the amount of noise present in the image.

- Subtracting Noise: Once we have an estimate of the noise, this is subtracted from the image, essentially starting the process of revealing the image beneath the noise.

- Repetition of Process: The previous two steps, estimating and subtracting the noise, are repeated multiple times. As the process unfolds, the image gradually transforms from an unrecognizable noise state to a familiar form - in our case, an image of either a cat or a dog.

At this stage, it is important to highlight that the generation of either a cat or a dog's image is unconditioned; we have no control over the outcome of the process. We'll tackle this issue when we delve into the subject of conditioning. But for now, it's quite remarkable to see the power of reverse diffusion at work.
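The estimate-subtract-repeat loop can be sketched as follows. The `predict_noise` function is a hypothetical stand-in that simply knows a fixed target image, so unlike a trained model it always converges to the same result; the step size and step count are likewise assumed values chosen for illustration.

```python
import random

TARGET = [0.8, -0.3, 0.1, 0.5]  # hypothetical "clean image" the stand-in knows

def predict_noise(image):
    """Stand-in for a trained U-Net: reports how far each pixel is from TARGET."""
    return [pixel - clean for pixel, clean in zip(image, TARGET)]

def reverse_diffusion(steps=50, step_size=0.2, seed=0):
    rng = random.Random(seed)
    # 1. Start from a completely random image.
    image = [rng.gauss(0.0, 1.0) for _ in TARGET]
    for _ in range(steps):
        # 2. Estimate the noise in the current image.
        noise = predict_noise(image)
        # 3. Subtract a fraction of the estimated noise.
        image = [p - step_size * n for p, n in zip(image, noise)]
    # 4. After repeating steps 2 and 3, the image is (nearly) noise-free.
    return image

result = reverse_diffusion()
print([round(v, 3) for v in result])
```

Removing only part of the estimated noise at each step, rather than all of it at once, is what makes the process iterative; real samplers choose those fractions according to a noise schedule.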

It's also important to add that the diffusion model generally does not reproduce the actual images it was trained on; it generates its own interpretation of what such an image should look like.

For further insights into reverse diffusion sampling and the intricacies of samplers, you might find this article particularly informative.

How Stable Diffusion Works - Addressing The Challenges of Image Space and Computation Speed

Now, it's time for a reality check: the reverse diffusion process explained above does not exactly reflect how Stable Diffusion works. The crux of the matter lies in the fact that this process operates in the image space, which is computationally heavy and immensely slow. Running this kind of model on a single GPU is nearly impossible, not to mention trying to do so on a less powerful GPU, such as one that might be found in a standard laptop.

To give you a sense of scale, consider a standard image dimension of 512x512 pixels, each with three color channels (red, green, and blue). Each pixel's color is determined by the specific values across these three channels, which means that to define a single image, we need to specify values in a 786,432-dimensional space! That's an astronomical number of values for just one image.

Prominent diffusion models, such as Google's Imagen and OpenAI's DALL-E, operate in pixel space, which is a synonym for the high-dimensional image space mentioned above. While these models have utilized several strategies to enhance their computational speed, it's still not sufficient for practical applications, primarily due to the extensive computational resources required.

In a nutshell, while the concept of reverse diffusion is incredibly promising and exciting, it is not practical in its raw form, given the computational demands and processing power required. The solution, as we'll discuss in the next section, lies in the realm of latent space and a method known as Stable Diffusion.

Tackling Challenges: The Stability in Stable Diffusion

Despite their potential, diffusion models were initially bogged down by high computation costs and time consumption. Stable Diffusion emerged as the saviour, overcoming these issues.

- Instead of operating directly on the image, latent diffusion models function within the latent space.

- The image is encoded in a smaller space, allowing for noise addition and removal on a low-dimensional representation of the image, saving computational resources.
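To put numbers on the saving, the arithmetic below compares a 512x512 RGB image with a latent of shape 4x64x64. The latent shape is an assumption not stated in this article; it is the size commonly cited for Stable Diffusion v1.

```python
image_values = 512 * 512 * 3   # height x width x RGB channels
latent_values = 4 * 64 * 64    # assumed latent shape for a 512x512 image in v1

print(image_values)                   # 786432
print(latent_values)                  # 16384
print(image_values // latent_values)  # 48
```

Under that assumption, each denoising step handles 48 times fewer values than it would in pixel space.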

A Tweaked Stable Diffusion Process

While encoding images into the compact latent space representation does provide tremendous efficiency gains, it comes at the cost of losing some image details. The lower-dimensional latent space simply lacks the capacity to perfectly reproduce all nuances present in the original high-resolution image space.

This is where the decoder portion of the variational autoencoder (VAE) comes into play. The initial coarse image generated by decoding the latent vector lacks intricate textures, shadows, and other fine details. However, the decoder has learned to synthesize these finer elements during its training process.

So in the case of Stable Diffusion, the VAE decoder acts as a rendering network - taking the basic structural outline produced from the latent space vector and painting in realistic details through convolutional upsampling. The decoder inserts finer brush strokes, lighting effects, and textural elements that would have been discarded in the dimensionality reduction of the encoding process.

By leveraging the VAE decoder's talent for hallucinating realistic details, Stable Diffusion can compensate for the lossy compression of image information into the latent space. The combination of the compressed latent representation and the detail-enhancing decoder networks is what enables Stable Diffusion to generate intricate images so efficiently.

Steering the Image Generation with Text Conditioning and Embeddings

The text prompt is critical to guiding Stable Diffusion's image generation, transforming it from an uncontrolled process to controllable text-to-image synthesis.

The prompt is first tokenized, and each token is then mapped to a numerical vector called an embedding. These embeddings capture semantic relationships between words. The text embeddings then go through additional processing by a transformer module.

Critically, the conditioned text embeddings are fed into the noise prediction U-Net at multiple points through a cross-attention mechanism. This allows the prompt to steer the iterative diffusion process toward images matching the description.

For example, with the prompt "A man with blue eyes", the words "blue" and "eyes" are linked. The model uses this relationship to generate a face with blue irises rather than unrelated blue elements. The prompt provides a conditioning signal that nudges the reverse diffusion toward the desired visual features.

Modifying the cross-attention module alone via techniques like Hypernetworks and LoRA is enough to fine-tune Stable Diffusion models. This demonstrates the pivotal role text conditioning plays in guiding the generative process.

Text Conditioning: From Prompt to Noise Predictor

- Tokenizer: The text prompt undergoes tokenization using a CLIP tokenizer, which converts words into numerical tokens. Tokens are not always equivalent to individual words: the tokenizer only knows the words and word pieces it saw during training, so an unfamiliar word is broken into smaller known pieces. Whitespace is also encoded as part of a token rather than discarded.

- Embedding: Each token is assigned a unique 768-value vector known as an embedding. These embeddings capture the relationships between words, allowing the model to leverage semantic connections. Words with similar meanings or contexts tend to have embeddings that are closely related.

- Text Transformer: The embeddings are then processed by a text transformer, which acts as a universal adapter for conditioning. This transformer provides a mechanism for incorporating different conditioning modalities, such as class labels, images, or depth maps. It further refines the data before passing it on to the noise predictor.
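The way a tokenizer splits unfamiliar words can be illustrated with a toy example. This sketch uses greedy longest-match splitting over a tiny hypothetical vocabulary; CLIP's real tokenizer uses byte-pair encoding with a far larger vocabulary, so treat this only as an illustration of the idea that tokens and words are not one-to-one.

```python
VOCAB = {"<unk>": 0, "a": 1, "man": 2, "with": 3, "blue": 4, "eyes": 5,
         "dream": 6, "beach": 7}  # hypothetical toy vocabulary

def tokenize(prompt):
    """Greedy longest-match tokenizer: unknown words are split into known pieces."""
    token_ids = []
    for word in prompt.lower().split():
        start = 0
        while start < len(word):
            # Try the longest remaining substring first, then shrink it.
            for end in range(len(word), start, -1):
                piece = word[start:end]
                if piece in VOCAB:
                    token_ids.append(VOCAB[piece])
                    start = end
                    break
            else:
                # No known piece starts at this character.
                token_ids.append(VOCAB["<unk>"])
                start += 1
    return token_ids

print(tokenize("a man with blue eyes"))  # [1, 2, 3, 4, 5]
print(tokenize("dreambeach"))            # [6, 7]
```

The made-up word "dreambeach" is not in the vocabulary, so it comes out as two tokens, one for each known piece.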

Cross-Attention: Where Prompt Meets Image

The noise predictor integrates the text transformer's output at multiple points within the U-Net structure through cross-attention layers. This allows the prompt embeddings to guide the noise prediction process across the full generative pipeline.

This cross-attention ensures that the generated image aligns with the prompt by associating specific words within the prompt with corresponding image features. For example, if the prompt mentions "a man with blue eyes," the cross-attention mechanism pairs the words "blue" and "eyes" to guide the image generation process accordingly.
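A stripped-down version of cross-attention is sketched below, assuming the standard scaled dot-product form: queries come from image positions, while keys and values come from prompt tokens. The learned projection matrices of a real U-Net are omitted, and all vectors are small hypothetical values chosen so the effect is easy to see.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross_attention(queries, keys, values):
    """Each query (image position) takes a weighted average of the values (text tokens)."""
    scale = math.sqrt(len(keys[0]))
    outputs, all_weights = [], []
    for q in queries:
        weights = softmax([dot(q, k) / scale for k in keys])
        mixed = [sum(w * v[i] for w, v in zip(weights, values))
                 for i in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-D vectors: two image positions, three prompt tokens.
image_queries = [[1.0, 0.0], [0.0, 1.0]]
text_keys = [[4.0, 0.0], [0.0, 4.0], [1.0, 1.0]]      # e.g. "blue", "eyes", "man"
text_values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

outputs, weights = cross_attention(image_queries, text_keys, text_values)
for row in weights:
    print([round(w, 2) for w in row])
```

Each printed row shows how strongly one image position attends to each prompt token; the first position leans on the first token and the second position on the second.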

Other Conditionings and Enhancements

Text prompts are not the only form of conditioning in Stable Diffusion. Other methods, such as depth-to-image and ControlNet, enable the incorporation of additional factors to refine and control image generation. These techniques utilize depth maps, outlines, human poses, and other conditioning modalities to achieve precise control and enhance the quality of generated images.

Beyond Text: Expanding the Horizons of Stable Diffusion

Text prompts are not the only way to condition a Stable Diffusion model. Let's explore additional conditioning techniques that unlock new possibilities:

- Depth-to-Image Conditioning: Alongside text prompts, Stable Diffusion can be conditioned using depth images. This conditioning allows for the precise control of depth-to-image model outputs, adding depth and dimensionality to the generated images.

- ControlNet: ControlNet, another powerful conditioning mechanism, integrates various factors such as detected outlines and human poses. This approach empowers Stable Diffusion with exceptional control over image generation, opening up exciting avenues for creative expression.

Stepping Through Stable Diffusion: Image Generation Process

Now that we have a comprehensive understanding of the underlying theory, let's take a step-by-step look at the image generation process within Stable Diffusion:

Text-to-Image

- Random Tensor Generation: A random tensor is initially generated in the latent space, serving as the starting point for image generation. This tensor is controlled by setting the seed of the random number generator.

- Predicting Latent Noise: The U-Net noise prediction module ingests both the latent image embedded with noise and the text prompt. It then forecasts what the latent noise signal should be in order to eventually yield the image features described by the text. This predicted noise guides the subsequent steps of the generation process.

- Noise Subtraction: The predicted noise is subtracted from the latent image, resulting in a new latent image. This subtraction is repeated for a set number of sampling steps, refining the image at each iteration.

- Decoding to Pixel Space: Finally, the VAE decoder converts the refined latent image back into the pixel space, yielding the generated image after completing the Stable Diffusion process.
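The role of the seed in the first step can be shown with a short sketch. The latent shape (4x64x64) is an assumption for a 512x512 output in v1, and `initial_latent` is a hypothetical helper built on Python's standard random module rather than any particular framework's generator.

```python
import random

LATENT_SHAPE = (4, 64, 64)  # assumed: channels x height x width for a 512x512 output

def initial_latent(seed):
    """Draw the starting latent tensor (flattened) from a seeded Gaussian generator."""
    rng = random.Random(seed)
    count = LATENT_SHAPE[0] * LATENT_SHAPE[1] * LATENT_SHAPE[2]
    return [rng.gauss(0.0, 1.0) for _ in range(count)]

same_a = initial_latent(seed=42)
same_b = initial_latent(seed=42)
other = initial_latent(seed=43)

print(same_a == same_b)  # True: same seed, same starting noise
print(same_a == other)   # False: a different seed gives different noise
```

Because the remaining steps start from this tensor, reusing a seed with the same prompt and settings is what makes a generation repeatable.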

Text Conditioning: Unleashing the Power of Text-to-Image

The process begins with text conditioning, where the text prompt undergoes a fascinating transformation. Let's explore how this happens:

- Tokenizer: The text prompt is tokenized using CLIP's tokenizer. OpenAI's CLIP model learns to match images with the text captions that describe them, placing both in a shared embedding space; it does not generate captions itself. Stable Diffusion v1 uses CLIP's tokenizer to tokenize the prompt.

- Tokenization: Computers read numbers, not words. Tokenization converts each word in the prompt into a numerical representation known as a token. However, tokenization is not a one-to-one mapping. Some words are split into multiple tokens, while others may produce a single token.

- Token Embedding: Each token is then converted into a 768-value vector called an embedding. These embeddings capture the essence of the words and their relationships. For example, similar words like "man," "gentleman," and "guy" have nearly identical embeddings because they share similar meanings.
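One common way to measure how "close" two embeddings are is cosine similarity, sketched below. The three-value vectors are invented for illustration and are not real CLIP embeddings, which have 768 values in Stable Diffusion v1.

```python
import math

# Hypothetical 3-value embeddings; real Stable Diffusion v1 embeddings have 768 values.
EMBEDDINGS = {
    "man":       [0.90, 0.10, 0.05],
    "gentleman": [0.85, 0.15, 0.10],
    "guy":       [0.88, 0.08, 0.02],
    "banana":    [0.05, 0.20, 0.95],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: near 1.0 means very similar direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

for word in ("gentleman", "guy", "banana"):
    score = cosine_similarity(EMBEDDINGS["man"], EMBEDDINGS[word])
    print(f"man vs {word}: {score:.3f}")
```

With these made-up vectors, the near-synonyms score close to 1 while the unrelated word scores much lower, which is the kind of structure the model exploits.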

Embedding: Unleashing the Power of Word Relationships

The embeddings derived from the tokenization process play a crucial role in Stable Diffusion. Here's why embeddings are so important:

- Exploiting Word Relationships: Embeddings allow Stable Diffusion to leverage the relationships between words. Words that are closely related, such as synonyms or words within the same context, have embeddings that are similar. This enables Stable Diffusion to understand the nuances and connections between different words.

- Triggering Styles: Embeddings have the remarkable ability to trigger specific objects and styles. By finding the right embeddings, scientists have unlocked the power of fine-tuning models using a technique called textual inversion. This technique allows for precise control over object generation and style manipulation.

Feeding Embeddings to the Noise Predictor: Unlocking the Power of Conditioning

After generating the text embeddings, Stable Diffusion feeds them into a transformer module for additional processing to prepare the guidance signals before injecting them into the noise predictor. Here's how this conditioning process works:

- Text Transformer: The text transformer acts as a universal adapter for conditioning. It takes in the text embedding vectors and performs additional processing. This transformer not only enhances the data but also allows for the inclusion of various conditioning modalities, such as class labels, images, and depth maps.

Cross-Attention: Where the Magic Happens

The output of the text transformer is utilized by the noise predictor through a cross-attention mechanism, which forms the heart of Stable Diffusion's image generation. Let's take a closer look at this pivotal process:

- Prompt and Image Interaction: The cross-attention mechanism brings together the prompt and the image, ensuring they work in harmony. For instance, if the text prompt describes "A majestic lion with a flowing mane," Stable Diffusion correlates the words "lion" and "mane" through self-attention within the prompt. This guides the model to generate a lion's head with a full mane rather than, say, a mane on a zebra. This information is then used to steer the reverse diffusion towards generating images featuring a lion with a flowing mane (cross-attention between the prompt and the image).

- Unleashing Styles: Remarkably, the cross-attention network also plays a role in style manipulation. Techniques like Hypernetwork and LoRA models utilize this module to fine-tune Stable Diffusion models and introduce specific styles. It demonstrates the crucial significance of this module in the overall functioning of Stable Diffusion.

Noise Schedule: Unveiling the Evolution

The noise schedule in Stable Diffusion determines the magnitude of noise subtraction at each sampling step. By adjusting the noise schedule, it is possible to control the transformation of the image from noisy to clean. This process ensures that the diffusion converges towards the desired image while preserving the finer details and characteristics.
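As an illustration of what a noise schedule looks like, the sketch below builds a linear schedule of the kind used in early diffusion-model work. The step count and the start and end values are assumptions chosen for illustration, not necessarily what any given Stable Diffusion sampler uses.

```python
def linear_beta_schedule(steps=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances that grow linearly from beta_start to beta_end."""
    return [beta_start + (beta_end - beta_start) * i / (steps - 1) for i in range(steps)]

def cumulative_signal(betas):
    """alpha_bar[t]: fraction of the original signal variance left after t+1 steps."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

alpha_bars = cumulative_signal(linear_beta_schedule())
for t in (0, 249, 499, 749, 999):
    print(t, f"{alpha_bars[t]:.4f}")
```

Sampling walks this curve in the opposite direction, from almost pure noise back to almost pure signal, so the schedule fixes how much noise is expected to remain at each sampling step.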

Classifier-Free Guidance: Steering the Diffusion

To further enhance the guidance and control of Stable Diffusion, researchers introduced Classifier-Free Guidance (CFG). While the traditional approach relied on a separate classifier model, CFG leverages image captions to condition the diffusion process without the need for a classifier. The CFG scale parameter controls the influence of the text prompt on the diffusion process, allowing users to strike a balance between unconditioned and prompt-guided image generation.
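The effect of the CFG scale can be written as a one-line formula: the final noise estimate starts at the unconditioned prediction and moves toward the prompt-conditioned one by a factor equal to the scale. The sketch below assumes that standard formulation; the function name and the three-value predictions are hypothetical.

```python
def classifier_free_guidance(noise_uncond, noise_cond, scale):
    """Move from the unconditioned prediction toward (and past) the prompt-conditioned one."""
    return [u + scale * (c - u) for u, c in zip(noise_uncond, noise_cond)]

noise_uncond = [0.25, -0.5, 1.0]   # hypothetical prediction with an empty prompt
noise_cond = [0.5, 0.0, 0.5]       # hypothetical prediction with the text prompt

print(classifier_free_guidance(noise_uncond, noise_cond, 0.0))  # [0.25, -0.5, 1.0]
print(classifier_free_guidance(noise_uncond, noise_cond, 1.0))  # [0.5, 0.0, 0.5]
print(classifier_free_guidance(noise_uncond, noise_cond, 7.5))  # [2.125, 3.25, -2.75]
```

A scale of 0 ignores the prompt, a scale of 1 uses the conditioned prediction as-is, and larger values exaggerate the difference between the two, which is why high scales follow the prompt more literally.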

Larger Text Encoders and Open Data Improve Image Quality

When Stable Diffusion version 2 was released, it switched from using CLIP to the larger OpenCLIP text encoder model. This change helped improve the quality of the generated images.

There are two main reasons behind this upgrade:

- Bigger text model capacity. OpenCLIP has 5x more parameters than the CLIP model used in version 1. This increased capacity allows it to develop richer representations of language that better guide the image generation process.

- Open training data. While CLIP is open-source, it was trained on proprietary data from OpenAI. In contrast, OpenCLIP was trained on open datasets.

Switching to OpenCLIP gives researchers full transparency into the text encoder training, allowing better study and optimization of Stable Diffusion. Relying less on private data is important for the project's open-source ethos and long-term progress.

The combination of more model capacity and transparent training data enabled OpenCLIP to produce text embeddings that lead to higher quality image generation compared to CLIP. This change was an important step in the evolution of Stable Diffusion.

Using Negative Prompts to Steer Image Generation

In addition to providing descriptive text prompts, users can also guide Stable Diffusion's image generation process through "negative prompts".

Negative prompts allow specifying terms and concepts that the model should avoid when synthesizing an image. This gives artists more control over steering the output away from unwanted elements.

For example, a prompt like:

"A lush green landscape, dramatic sunset sky, negative prompt: people, cars, buildings"

This would guide the model to generate an outdoor scene without populated elements like people, vehicles, or architecture.

Negative prompts act as repellants, pushing the image synthesis away from unwanted visual features. In common implementations, the negative prompt takes the place of the empty, unconditioned prompt in classifier-free guidance, so each sampling step is steered toward the positive prompt and away from whatever the negative prompt describes.

This steering away from unwanted concepts complements positive descriptive prompts. Used together, they allow users to sculpt generated images with precision.

Several front-end services like Stability AI's DreamStudio cloud incorporate negative prompts as a key feature for shaping AI artistry. By expanding control over the text conditioning, negative prompts unlock new creative directions.

The Creative Possibilities Unlocked by Stable Diffusion

Because Stable Diffusion does not claim copyright on its outputs, retains an open-source ethos, and allows for significant user creativity, it has become enormously popular among digital artists. Users can guide the AI to render almost anything they can describe in text, from photorealistic portraits to surreal landscapes.

Some examples demonstrate the creative potential:

- A vivid painting of a fox spirit standing in a moonlit forest clearing

- A Pikachu happily fine dining while overlooking the Eiffel Tower

- An astronaut riding a Pegasus through a nebula

The ease of generating such visually compelling images with only text prompts unlocks new directions for artists aiming to augment their workflows.

Confronting the Societal Impacts of Generative AI

However, Stable Diffusion also raises concerns about how AI art may disrupt creative sectors, enable the spread of misinformation, and lead to other issues. For example, some worry it could facilitate making non-consensual fake imagery.

As this technology continues advancing rapidly, it will be critical to consider:

- How to properly credit AI systems without stifling artistic creativity.

- The need for transparency when AI-generated content is produced or shared online.

- Ongoing safety research to prevent harmful use cases.

Exploring Stability AI's Latest Innovation: SDXL2

Stability AI continues to push boundaries in AI image generation with their new SDXL2 model. This advanced system introduces several notable new capabilities:

- Massive scale - SDXL2 boasts 3.5 billion parameters in its base model, and an ensemble version with 6.6 billion parameters. This represents a huge increase over previous models.

- Leveraging large language models - SDXL2 utilizes one of the largest open-source CLIP models created so far for unmatched text-to-image prompting.

- Accessible system requirements - Despite its formidable outputs, SDXL2 is designed to run on modern consumer GPUs, making it achievable for many users to experience.

- Open source - The code for SDXL2 is openly available on GitHub for community learning and contribution.

- Free tier - SDXL2 offers free generation of 400 images per day to make the technology widely accessible.

- Versatile features - Users can leverage image-to-image prompting, inpainting, outpainting and other capabilities for enhanced creativity.

- Image upscaling - intuitive image upscaling and more.

By combining massive scale, leading-edge CLIP guidance, approachable requirements and open availability, SDXL2 represents an exciting innovation in Stability AI's mission to progress and democratize AI art generation. The release promises to unlock new creative horizons for many users. SDXL2 is currently available in Stability AI's own DreamStudio cloud service.

Guiding Stable Diffusion's Continued Evolution

The advent of Stable Diffusion has unlocked remarkable new creative potential through AI image generation. However, as with any powerful technology, it also brings new responsibilities.

As Stable Diffusion continues advancing rapidly, developers must maintain an ethical compass. Efforts like expanding safety research, improving transparency, and enabling proper attribution will be vital.

If harnessed carefully, Stable Diffusion promises to augment human creativity, not replace it. The technology itself is neutral - its impacts depend on how people wield it. Maintaining an open and collaborative community focused on positive progress will be key.

There will always be setbacks and course corrections on the path ahead. But if society can embrace the spirit of using AI to expand possibilities for all, the future looks bright. Stable Diffusion has already changed the landscape of generative art. Guided by human wisdom, its next chapters could unlock wonders we can only begin to imagine.

Summary

- Stable Diffusion leverages latent diffusion models to efficiently synthesize images from text prompts.

- Key innovations like compressed latent spaces and fast noise prediction enabled its breakthrough.

- Text embeddings allow steering the image generation via conditioning.

- An active open-source community empowers rapid advancement.

- Impressive creative potential is unlocked, but ethical risks require vigilance.

- With thoughtful guidance, Stable Diffusion can augment human creativity and open new frontiers of expression.

In summary, this versatile generative model has already reshaped the AI art world. But the journey has only just begun. Handled carefully, its future promises to be rich with creativity and possibility.